Logging in Python

Mon Sep 04 2023E.W.Ayers

Logging is quite complicated in Python. This is more of a prescriptive article.

References:

Python Logging Howto, including basic and advanced tutorials.

Python Logging Cookbook. Common 'recipes' for logging setups.

1. How to log.

Use print if it's part of the actual program output. Eg if you were writing a grep-like tool, you should use print to print the output lines of the search. You should avoid the temptation to output information messages like "loading" or "found 10 files" using print, it's really annoying to turn off if you end up with someone else using your code.

Remember that your code might be used from another app, and it might not be possible to modify your source, and if your module is using print it could wreck the output of this downstream tool.

Some programs expect very precise machine-readable output on stdout, using print for chirpy information can make your code unusable by others.

A good habit is to give each of your Python source files its own 'logger'.

import logginglogger = logging.getLogger(__name__)

The __name__ field will be my_package.my_module, so all of the logs from this logger will be forwarded to the parent logging.getLogger('my_package').

But don't be afraid to have multiple loggers for different 'channels' if it meakes sense. Eg you could have my_package.access for logging each time your webserver is accessed.

logger.error()should be used to print errors that are recoverable, but which the developer should know about. If your production code is emittinglogger.errormessages, it should be picked up and stopped.logger.exception('oh no')is the same aslogger.error, but it will also log the stack trace if you are in an exception handler.logger.warnshould be used to emit warnings.There is also a

warningsbuiltin module, that lets you 'raise' warnings like errors. Imo you should usewarningsinstead oflogger.warnwhen the warning is something that a developer who is using your module needs to be aware of. For example if they are using a deprecated method or they are doing something inefficiently. Then uselogger.warnif the end-user or production version of the app emits the warning (eg to make warnings appear in your cloud logs).logger.infois for informational messages that are not annoying or tmi. They are messages that a user of the module will always reasonably want to see.logger.debugis a firehose of information that you want to see while developing the app. Do whatever you want that works for you.

2. How to manage logs

The way to think about logs is that they are a stream of LogRecords, each record has the following information

name: Which 'logger' emitted it, this should be a dot-separated string list. This dot-name convention effectively sets up a hierarchy of loggers.level: whether the log is error, warn, info, debug.pathname,lineno,func, etc: source file location where the log was emitted.sinfo,exc_info,process,thread, etc: information about the stack and exception when the log was made.createdThe time when the log record was created.msg, is the object that is logged. It doesn't have to be a string, by defaultstr()will be called to determine the string.

So we have a firehose of LogRecord objects, and we need to translate these into something useful. The best way to think about this is to first consider what the 'sinks' of your logs are. That is, the final destinations of the logs.

You define these using logging.Handler objects.

These could be:

a file

a cloud logging platform like Google Cloud Logging.

output them to

stderr(I highly highly recommend never outputting logs tostdout.)

For each of these sinks, we usually don't want to show all the logs because it would be too cluttered.

So we need to define filters for the logs.

This is done with logging.Filter.

To make a filter you subclass logging.Filter and override the filter(self, record) -> bool method. Note that you are also allowed to mutate the record, so you can also use filters as mappers for your log records. This is really useful for adding extra information to the records like request context.

We usually also need to convert these LogRecord objects into text in some way, ideally the formatting should be consistent for all the different logs.

This is done with logging.Formatter.

2.1. Plugging these together

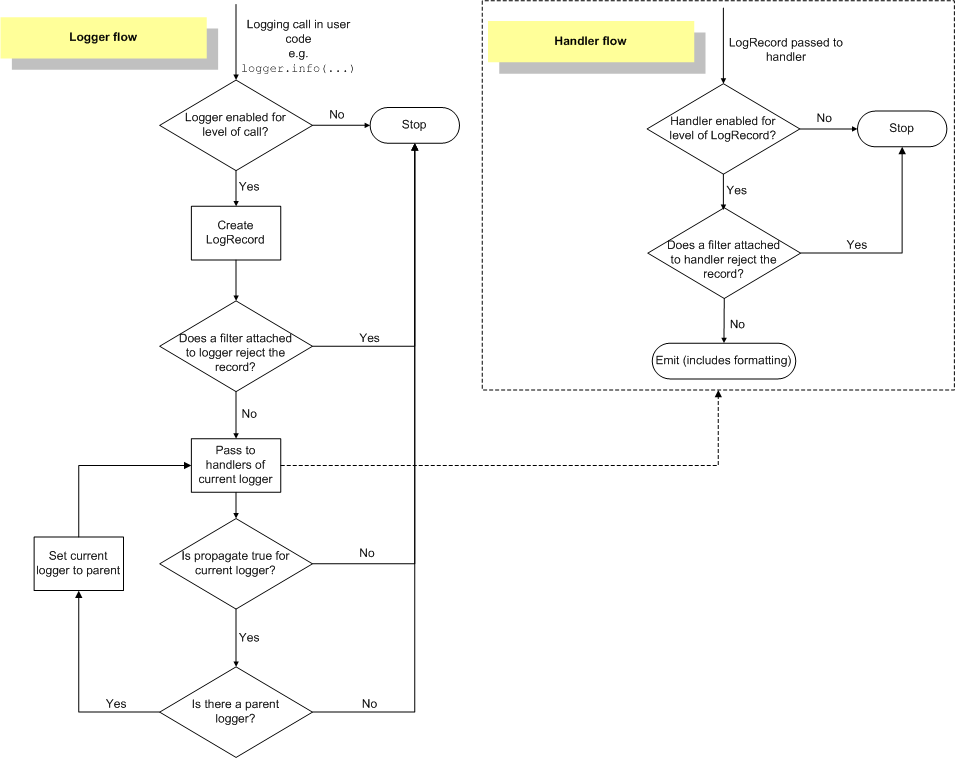

Python's howto guide has a good flowchart

Handlers are attached toLoggers.Filters can be attached toLoggers orHandlerseach

Handlercan have aFormatter, but there is a per-module fallback too.

2.2. Use rich

I highly recommend using the Rich library for formatting logs. Here is their example:

import loggingfrom rich.logging import RichHandlerlogging.basicConfig(level="NOTSET", format="%(message)s", datefmt="[%X]", handlers=[RichHandler()])log = logging.getLogger("rich")log.info("Hello, World!")

2.3. What does logging.basicConfig do?

It adds handlers, formatter to the root logger, unless it already has these set.

3. Just don't do it

3.1. Don't use print to log info

See above.

3.2. Don't filter logs where they are logged

Ie don't have code like if use_logs:\n logger.info('foo') in your function. Just always log, and let the log filters be in charge of deciding what is worth emitting.

3.3. Avoid passing loggers as function arguments or as class attributes

As argued in the docs, loggers are singletons so you don't need to hold references to them, just get them with logging.getLogger.

3.4. Don't put logging handlers and filters outside of a program entry point

The entry point of a program should be in charge of setting logging configuration (ie: what the handlers are, what is filtered, logging level, formatting).

If you have logging output setup somewhere halfway through your program, it means that others can't use your code as a library because it's spewing logs to somewhere it's not wanted.

See also the docs.

3.5. Don't include information that is already in LogRecord

Do not add to the log message:

the call site of the log.

when the log was emitted

stack and exception information

a string saying something like

warning:orerror:, this is already shown from the logging level.

Instead attach a formatter that includes this information.

3.6. Don't use logguru

There is a tool called LogGuru but my advice is to ignore it and just use the standard python logging system. There should only be one way to do logging, it's already frustrating enough without multiple ways of doing it.

3.7. Don't bother with LoggerAdapter

There is a feature in logging called LoggerAdapter, you subclass LoggerAdapter and then use it as a wrapper around your Logger, now whenever you call LoggerAdapter.info('asdf', **kwargs) it will first call LoggerAdapter.process('asdf', kwargs), and this is then sent to the wrapped Logger.

The idea is that if you are writing a webserver or some other thing with contextual information, you can wrap your logger in an adapter that injects the contextual information and feed that adapted logger to all of the handler methods.

But I think this is a pretty rubbish solution because you still need to explicitly pass around this LoggerAdapter object.

I think a much better approach is to use contextvars and an 'injecting filter' described here.

4. Logging with Flask, FastAPI, Dash

There are a few libraries that will decide to attach logging handlers for you. So to avoid repeated logging you need to remove some handlers.

Flask's logger is found at app.logger, by default (ie, if there is no handler attached to the root logger) it will add a StreamHandler to app.logger, but you can remove this.

Sometimes Flask uses a library called 'Werkzeug', which will by default attach a stream handler logging.getLogger('werkzeug').

Another library Uvicorn does its own thing with loggers.

5. How to override stuff

5.1. More detail on Handlers

The entry point for a Handler is handle(record).

def handle(self, record):rv = self.filter(record)if rv:self.acquire()try:self.emit(record)finally:self.release()return rv

If you write your own handler, you should override some of the following:

emit(record)should contain the logic for actually writing the log. Note that to convert the record to a string you should callself.format(record)somewhere inemit.flushwill get called to push all the logs up to some resource (eg if you are buffering logs).closewill get called at the end of life for the handler, you can override it to clean up your resources.

Avoid overriding handle, format, acquire, release, handleError.